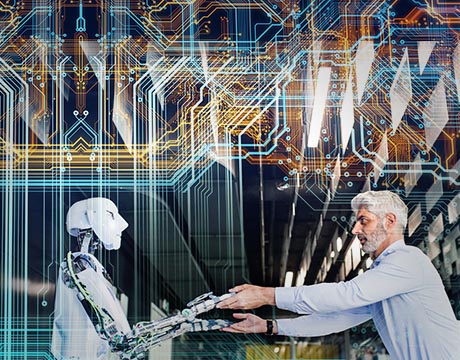

Do Robots Deserve Legal Rights?

Saudi Arabia made waves in late 2017 when it granted citizenship to a humanoid robot named Sophia, developed by the Hong Kong-based Hanson Robotics. What rights that citizenship technically includes, and what the move might mean for other robots worldwide, remains unclear. But the robot itself wasted no time in taking advantage of her new high profile to campaign for women’s rights in her adopted country.

This would be the same Sophia that, in a CNBC interview with her creator, Dr. David Hanson, said that she would “destroy all humans.”

So, granting legal rights to robots clearly remains a complicated subject, even if it is done primarily as a PR stunt to promote an IT conference, as was the case in Saudi Arabia.

But that hasn’t stopped a long list of voices in this country and abroad from arguing both for and against the creation of a set of “rights” for robots, based on a variety of concerns. Is robotics beginning to outpace our existing system of ethics and regulations, so that we need a new system to deal with these issues before they surpass what we can control? Or does creating a set of machine-specific rights for these inventions place too much emphasis on robots as a sort of life form?

So far the idea has generated a lot of discussion, but few researchers are throwing their support behind robots as a legally protected class on par with humans.

“Let’s take autonomous cars,” says Avani Desai, principal and executive vice president of independent security and privacy compliance assessor Schellman & Company. “We have allowed computers to drive and make decisions for us, such as if there is a semi coming to the right and a guard rail on the left the algorithm in the autonomous car makes the decision what to do. But if you look back, is it the car that is making the decision or a group of people in a room that discuss the ethics and cost, and then provided to developers and engineers to make that technology work?”

Robots might use machine learning to refine their algorithms over time, Desai argues, but there is always an engineer who originally coded that information into their databanks. So the morals, ideals, and thoughts of that engineer could be coded into a robot that might go on to make decisions affecting the population at large.

From a legal perspective, then, who would face trouble in the case of litigation related to a decision that a robot made? Would it be the engineer, the manufacturer, or the retailer who sold and serviced that robot? Or would it be the robot itself?

“We see this issue with autonomous cars and the legal cases we have seen and it has come back to the automobile manufacturer due to a flaw in the algorithm,” Desai says. “Until we as a society fully understand the implications of robots, the technology and the decision-making process, we should not apply the same rights we apply to humans to robots.”

Whose Rights?

The analogy of the car is a good one, says Chris Roberts, the head of industrial robotics at Cambridge Consultants, in part because both are expensive, complex and valuable machines. They both need to be insured and protected in roughly the same way.

And neither deserves its own set of legal rights.

“I work with cutting-edge robotics and neural networks every day, and while the technology is really exciting and we can routinely do things that were science fiction only a few years ago, we're a long, long way from creating sentience and having to worry about the moral rights of the machine,” he says. “Sophia may look like a person, and respond in ways that mimic human responses, but it is just a machine. Facial recognition, neural networks, speech synthesis and so on can look convincing, but they're not intelligent, just good at doing a particular task, in this case responding to questions.”

Despite those arguments, there are still moral questions around robotics, including the ethics of letting machines make decisions at all, and whether to program them to save only humans rather than other animals. With robots, many of the moral questions revolve around the work they are doing and the jobs they are replacing. If a robot comes in and replaces an entire class of workers overnight, what moral duty do we as a society have to help the people who are directly affected by this change? What sort of retraining and support should we offer them?

“It's the rights of the people that are much more important than the rights of the machine,” Roberts says.

Beyond the Machines

At its core, the question of robot rights is less about what those potential rights should look like and more about why we would want to protect them as a group at all. Is it in their interest or ours?

A lot of it comes down to fear, according to Dr. Glenn McGee, an expert in bioethics and a professor at the University of New Haven. Fear that the robots won’t need us anymore. Fear that they will get smarter than we are. Fear that these new creations will rise up and come get us.

And we’ve seen this before.

“It’s like when the Europeans first came to the Americas and the colonies began to rebel,” he says. “We’ve historically always begun with the assertion that if you give rights to something, something that didn’t have rights before, you’re doing so because that will enable them to function without coming to get you. I think a lot of the discussion around rights for robots or entitlements that robots have come less from our sense as a society than the idea that we better get this right or else.”

For example, if we encountered an alien race we wouldn’t expect them to follow the set of laws and standards that we as humans do. We’d look at them through an anthropological lens first to see what it means for these other creatures to interact with our world and what we need to do to protect ourselves.

“We're not talking about whether or not creatures who otherwise would have a soul will have more of one if we give them rights,” Dr. McGee says. “We're really describing whether or not the designed creature, the robots that sort of become animal, whether or not the design will afford them the ability to interact with us in ways that make us feel better.”

Tim Sprinkle is an independent writer.