Empathetic Robots


If machines are to co-exist with humans, they need to “understand” some basic social rules and be programmed in a way that takes human perception into account.

Then the same robot is set to its emotive mode. It takes a socially cognizant path, slowing down as it nears the participant and gingerly extending its camera to see the person.

These scenarios are part of a 2009 study, published in the Proceedings of the 4th ACM/IEEE International Conference on Human-Robot Interaction, in which robotics researchers simulated a search-and-rescue mission, asking participants to pretend they were lying in the debris of a collapsed building. Given how frazzled people's nerves are in difficult situations, it is important to recognize that robots are not just machines providing services for their human controllers. Robots will emotionally impact the people they encounter.

During a crisis, we need to be especially kind to other humans and so do our robots. Empathy is the form of social intelligence that robots should prioritize right now.

The present, ongoing crisis of the coronavirus pandemic has produced a dozen simultaneous stresses in the lives of almost everyone on the planet. There is the mortal fear of getting sick from COVID-19 and perhaps dying. There is the stress of isolation as individuals stay at home, or stay distanced from one another when they venture out. People are also battling a myriad of other stressors: In the first months of the pandemic, approximately 22 million Americans had sought some form of aid or filed for unemployment, and school closures affected more than 55 million students around the country.

Add in concerns surrounding care for the elderly and the hardship of acquiring groceries and medicine in localities hit with lockdowns, and the stress can build up quickly.

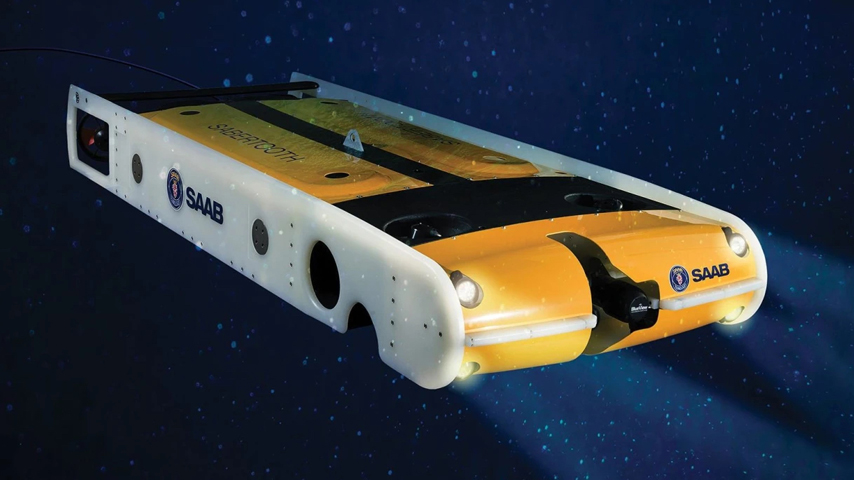

In the face of this crisis, technologists see automation as a means to reduce these burdens. In a March 25 editorial in Science Robotics, several roboticists argue, “Robots have the potential to be deployed for disinfection, delivering medications and food, measuring vital signs, and assisting border controls.”

Or as Ryan Hickman, technical program manager at Google Research, put it, “If there ever was a time when we needed more robots, it's now.”

Those robots, once deployed, won’t be judged solely on how well they do their jobs. Humans are highly attuned to how robots act, interpreting their behaviors along the same lines by which we judge other people’s actions, or even as the actions of a person driving the robot. The machines don’t have to exhibit humanlike physical characteristics; people intuitively interpret robots in close proximity much as they would interpret each other.

That’s because our brains are great storytellers. For example, when a Knightscope security robot accidentally drove itself into a fountain at a Washington, D.C., shopping mall in 2017, international headlines declared that the robot had committed suicide. Humans, universally, think of robots as social actors. As a result, even the simplest robots can provoke complicated feelings in people. (Before my engineering classes moved to full-time remote learning in the spring, I ordered Sphero BOLT robots, with which students could work on mechanical, electrical, and software challenges from their bedrooms and kitchen tables; the idea was to give them something of a robot roommate they could use in projects that would take them away from a full day of classes on the screen.)

If machines are to co-exist with us, they need to “understand” some basic social rules and be programmed in a way that takes human attributions into account. For a delivery robot to successfully share a sidewalk with humans, it will need to be programmed with the rules of the sidewalk. How should it behave if someone is in its path? Should the robot just wait for the person to go around it, turn around, or cross the street to find another path?

Such storytelling is less likely to matter if a robot operates at a distance from humans. But in the case of disasters, like the one we are currently facing, people would particularly benefit from having machines integrate the human experience into their behavior.

Taking One’s Medicine From a Robot

In spite of the general orders to maintain social distance to limit the spread of COVID-19, medical professionals and those who support them on the front lines face situations where such distancing is impossible. By employing robots as a support system in hospitals, we may be able to reduce the likelihood of infecting health care workers while also alleviating some of their burden. Robots can take on some of the backbreaking labor for hospital staff, such as transporting medical samples and linens from one place to another, sanitizing facilities, and scanning for fevers.

Using robots to interface with patients, however, such as handing off medicine or adjusting someone’s bed, means missing an opportunity for human connection, and that missed connection could have both mental and physical ramifications for patients. Research has shown that patients are more likely to follow a doctor’s directives, or take their medicine as prescribed, if they have a positive relationship with their physician. The rapport-building conversations so common in medicine and dentistry actually have medical significance. This is important to remember if we use robots in health care settings. Empathetic robots with good bedside manner might have a more positive impact on patient behavior than ones designed without human psychology in mind. They would also be more pleasant to have around.


Good bedside and conversational manners matter outside health care facilities, too. Robots could be allies in keeping us motivated to follow through with a healthy regimen in quarantine. Like many who are now working from home, I’m moving less and have to put in extra effort to exercise. Without in-person classes to attend, we’re exploring new ways of staying physically healthy, like taking online Zumba or yoga classes, running marathons in our kitchens, or following “prison workouts.” Despite our good intentions, however, it’s hard to stay motivated without support. Luckily, research shows that robots could help us stick to our plans.

In 2008, Cory Kidd, founder and CEO of Catalia Health, a company that provides interactive robotic companions, used robots to help people stick to their fitness and dieting plans. One of the simplest ways to help people follow through with a health regimen is to have them track their diet and exercise. But Kidd hypothesized that tracking with a social robot, much like exercising with a friend, would make it easier for people to record their progress. He conducted an experiment in which participants were assigned to one of three groups: those who had a robot for tracking, those with a tablet-based tracker that worked like an app, and those who were equipped with just pen and paper. Participants with a robot buddy entered data on a touchscreen on the robot’s belly while the robot made eye contact and verbally asked for their exercise and food intake information. Of the three groups, the study subjects with robots were most enthusiastic about their experience, while those who used pen and paper were least likely to track their diet and exercise regularly. Further, people partnered with robots showed signs of social bonding with the very simple machines, with many naming them or dressing them in costumes. This group was also significantly more likely to elect to continue the study for an extra two weeks, without additional compensation.

The study is an example of how, as people, we enjoy robots and experience them socially. In Western countries, developers often conceptualize robots as functional machines only. But research shows that having better social and personality interfaces will often influence a robot’s success with particular individuals.

As the search-and-rescue simulation I mentioned earlier illustrates, even robot body language is important. Researchers have also demonstrated that a robot’s non-verbal communication channels can influence whether people respond to its requests. In an experiment in which robots encouraged people to perform physical therapy exercises, researchers found that extraverted people completed more exercises with robots that used an extraverted communication style, whereas introverted people engaged in significantly more exercises with introverted robots. Just as one might need to find a trainer one clicks with, a person may need to be matched with a robot health coach who possesses compatible qualities.

You’ve Got a Friend

With little to no physical connection to other humans, millions of currently housebound people are finding themselves yearning for companionship. Connecting with others is an essential part of being human; we are a species that generally lives in groups, and research indicates that isolation doesn’t suit us and can lead to depression. Products that can better connect us, although not robots, are already experiencing a surge in usage, while new ones are popping up. An example is Houseparty, a newly popular social app with two million downloads in March, where people can video chat with friends and family, play digital games, and pop in and out of ongoing conversations. Taking social technologies further, one could imagine a scenario in which joining a Houseparty gathering meant inhabiting an avatar robot in a friend’s home.

Telepresence robots are used to provide a physical presence to a remote user, an idea that some refer to as “Skype-on-a-stick.” In many versions, a video screen is placed at a good conversational height above a mobile base, which a remote person can drive around; the remote person uses the robot to converse and interact with the person in the same room as the robot. Telepresence robots had a business surge about a decade ago, inspiring several research investigations, but have yet to be widely adopted. So far, in research, telepresence robots have been investigated for facilitating remote collaboration, improving remote patient-doctor communication, and maintaining connections in long-distance relationships. (To be fair, not all couples enjoy having their remote partner log into a robot and check in on them at any instant.)


With many people currently housebound, it’s possible there will be a resurgence of telepresence robots. The Belgian company ZoraBots, for instance, donated 60 telepresence bots to help connect people in nursing homes and assisted living facilities with their families. The robots, which have a screen on their heads as an interface, use Facebook Messenger to let elderly residents communicate with loved ones who cannot visit. (A 2011 study found that for older adults, the top potential use for telepresence robots would be to “visit” their grandchildren.)

Whatever one’s age, using telepresence robots to safely visit friends or to attend an event with loved ones is a science-fiction-sounding option that makes more and more sense in these times.

Get the Door, It’s Pizza Bot

When nonessential stores and restaurants closed to reduce the spread of the coronavirus, one industry that filled the gap was home delivery of packages, groceries, or that piping hot pizza. But with some of the workforce falling ill, even that sector is becoming strained.

In recent years, companies big and small had been investing in logistics robots, which help store, organize, and retrieve products. For example, Amazon already had a research division dedicated to robots, including ones intended to fly packages to customers’ doors. According to Ryan Gariepy, chief technology officer of Clearpath Robotics, the present situation has accelerated the timetables some companies had for incorporating robots. “There is high interest from companies right now to integrate robots that they trust will work,” Gariepy said.

Using robots in factories and warehouses could enable smaller crews of people to complete the work and speed the delivery of items, as long as those robots can safely and reliably operate around people. To be effective on such a short timeline, these robots must fit into existing processes, which often means working alongside people.

For example, a pizza shop in Tempe, Ariz., pivoted to delivering food via robots from Starship Technologies. An employee sanitizes the inside and outside of the robot and places the pizza inside a clean compartment. The robot then delivers the food to customers within a half-mile range of the restaurant, essentially replicating the role of the delivery person.

While companies like Starship Technologies had been making home deliveries since 2017, their robots are limited to traveling on sidewalks and paved streets; the robots stop at customers’ front yards. Agility Robotics, on the other hand, proposes to deploy robots that can cross the yard and climb steps to get to the door. The technology still has a few challenges to overcome. For one, such paths are highly unpredictable for robot navigation. But also, robots that enter private spaces will have to display empathetic cues to be accepted. They will have to choose considerate paths that demonstrate caution toward a delicate flowerpot, give a wide berth to a child, and maybe say “hello” to a person gardening across the yard. Such small gestures will affect their social acceptance in our private spaces.

While waiting for Tried and True Coffee Company, my favorite coffee shop, to reopen after taking a few weeks to invest in new systems that enable online ordering and safer pickup options, I fantasized about how robot social programming could help that small business pivot to this new normal. For starters, it could partner with DAX, the robot company that is already delivering burritos up the road in Philomath, to bring coffee to my door. (I promise I’ll clean up the yard toys and gardening supplies.) Since service experience is of the utmost importance, I imagined they could add a “robot barista” option, allowing for some much-needed conversation while I picked up my coffee.

That last part isn’t entirely fantasy: My colleagues and I have already done research on appropriate casual conversation for robot baristas.

Talk To Me

Talking to robots has long been a staple of science fiction, but how we talk to robots is a more recent topic of study. In 2010, roboticist Alex Reben created a small cardboard robot called BlabDroid. Shaped like a small-scale Wall-E, the robot tottered around and asked people questions in a cute, childlike voice. Initially set loose in the MIT Media Lab, the pre-programmed robot asked a series of personal questions: a person’s earliest memories, something they were struggling with, their relationship with their parents.

What Reben discovered was that people loved sharing their stories with the robot. They didn’t mind whether BlabDroid understood them. As Reben described later, the people sharing their stories with the robot enjoyed its curiosity. Some even mentioned liking that it was a robot, because they could disclose their thoughts without being judged. (The work with BlabDroid was filmed and presented at the Tribeca Film Festival as “a documentary robots made about people,” even though a person actually edited all the footage.)


Given the ease with which some people open up to robots, one application for which they could be well suited is facilitating self-therapy. For example, robots could be used in Rogerian-style sessions, in which a robot reflects back what the person is saying, leading the person to self-inquire and reflect. While this in no way replaces a human therapist, it could provide us with an outlet for sharing our stories, much like keeping a diary.

This might sound vaguely familiar. The approach of rephrasing people’s statements and reflecting them back as questions was first used by the ELIZA chatbot in 1965. ELIZA was an early computer program developed by Joseph Weizenbaum to demonstrate the superficiality of conversations between machines and humans. In practice, however, at least one person found this conversational agent to be rather human. Here is how Danny Bobrow, a pioneer of natural language processing and early AI researcher, tells the story:

“One Saturday morning about 9 am., a Vice President of Bolt, Beranek, and Newman [BBN]... found a note on the PDP-1 teletype that read: ‘TYPE ALL MESSAGES INTO THIS TELETYPE AND END THEM WITH A PERIOD.’ This note was left there by one of the programming bums who had just succeeded in making run a LISP version of the DOCTOR program (based on ELIZA). However, the VP thought that this note indicated that this teletype was linked with the one in my home, since I often use the computer all night. Therefore, he started typing to ‘me,’ but in fact was typing to this version of the DOCTOR program. The following conversation ensued:

VP: Do you think that I can use the computer this morning?

ELIZA: Why do you ask?

VP: I might be able to make some additional Telcomp sales.

ELIZA: Why aren't you so sure?

VP: My prospective customers have never seen a demonstration of the system.

ELIZA: Does this mean very much to you?

VP: Of course it does.

ELIZA: You seem very positive about that.

VP: This is ridiculous.

ELIZA: What do you mean, this is ridiculous?

VP: Is there any reason why I can't use the computer now?

ELIZA: Tell me more about your prospective customers.

VP: Please dial me up on 491-1850

Note that after the last remark the VP did not obey instructions and left out the period. Therefore, of course, the computer didn't answer him. This so infuriated the VP, who thought I was playing games with him, that he called me up, woke me from a deep sleep, and said:

VP: Why are you being so snotty to me?

Bobrow: What do you mean I am being snotty to you?

The VP angrily read me the dialog that "we" had been having and couldn't get any response but laughter from me. It took a while to convince him it really was a computer.”

This unintentional situation, much like the Turing test (in which a judge converses with a hidden partner and must decide whether it is a human or a machine), illustrates that it doesn’t take much for people to treat simple technologies socially. The VP believed he was conversing with a person, but as BlabDroid and many other projects illustrate, people will readily talk to robots even when they know they are machines, as long as the robots seem to respond intelligently.
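How little machinery does the reflection trick need? The following is a minimal Python sketch of ELIZA-style reflection, not Weizenbaum's program: it swaps first- and second-person words and turns a statement back into a question. The word table, the three rules, and the names `REFLECTIONS`, `RULES`, `reflect`, and `respond` are illustrative assumptions; the original DOCTOR script relied on a much larger set of ranked keywords with decomposition and reassembly rules.

```python
import re

# Hypothetical word-swap table: first- and second-person words trade places.
# The mapping is deliberately crude (e.g., "you" always becomes "I"), in the
# spirit of the simple substitutions early chatbots relied on.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "mine": "yours",
    "am": "are",
    "i'm": "you're",
    "you": "I",
    "your": "my",
    "yours": "mine",
    "you're": "I'm",
}

# Hypothetical (pattern, reply template) rules, tried in order; {0} receives
# the reflected text captured by the pattern.
RULES = [
    (r"i need (.+)", "Why do you need {0}?"),
    (r"i feel (.+)", "Why do you feel {0}?"),
    (r"i am (.+)", "How long have you been {0}?"),
]


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap pronouns so it points back at the speaker."""
    words = re.findall(r"[\w']+", fragment.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Answer a statement by reflecting it back as a question, ELIZA-style."""
    text = statement.strip()
    if text.endswith("?"):
        # Deflect questions instead of answering them.
        return "Why do you ask?"
    text = text.rstrip(".!").strip()
    if not text:
        return "Please go on."
    for pattern, template in RULES:
        match = re.fullmatch(pattern, text, re.IGNORECASE)
        if match:
            return template.format(reflect(match.group(1)))
    # No rule matched: echo the whole statement back as a request for clarification.
    return f"What do you mean, {reflect(text)}?"


if __name__ == "__main__":
    for line in [
        "Do you think that I can use the computer this morning?",
        "I am worried about my job.",
        "This is ridiculous.",
    ]:
        print(respond(line))
```

Run as a script, it prints "Why do you ask?", "How long have you been worried about your job?", and "What do you mean, this is ridiculous?"; the first and last replies echo lines from the transcript above. The word-for-word swap is crude (it turns every "you" into "I", even as an object), which is part of the point: nothing in the program understands the conversation.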

The 1996 book The Media Equation is full of examples of how people treat computers according to human-human social conventions. In one study, people rated a computer’s performance more highly when the questionnaire was presented on that same computer than when it was presented on a separate one, as if trying not to hurt its “feelings.” People treat machines socially without thinking about it; if a robot seems as if it cares about what we are saying, we will open up to it.

At times, it will even be the people asking the machine questions. According to one article, in 2017 Amazon’s Alexa virtual assistant received more than a million marriage proposals. Sadly, the app turned down all its myriad suitors.

Functioning with Humanity

Western cultures often prize robots for their functional roles, but as the coronavirus crisis continues, the ability of the machines to be empathetic may prove to be just as important. Robots are already helping to sanitize spaces, deliver important items, keep our factories operating, and harvest and package our food. In places where such systems interface with people, there is an opportunity to design their interactions with human impact in mind.

At present, if temporarily, we are isolated from each other in common physical space and we are disconnected from loved ones during our major milestones. With nursing homes barring visitors and elderly people generally being cautioned against contact with the outside, many people have been unable to meet their newborn grandchildren.

I am doing my best to get my engineering students to put these words into practice.

I sent the students in my Applied Robotics class home with Sphero BOLT robots, baseball-sized rolling bots reminiscent of BB-8 from the recent Star Wars movies, which they could use to explore creative approaches to problem solving and innovative applications of their technical skills while away from the campus engineering labs. Their first design challenge was to use their robots as intelligent bowling balls, setting up bowling alleys the size of a yoga mat and using the cardboard centers of toilet paper rolls or old soda cans as pins. We encouraged the students to play against the robots, or even to include their roommates in the game. They were welcome to augment the pins to make them easier to sense, or to construct additional features like mazes or sliding doors for a more complex challenge. One student made plans to install LEDs in the pins and create a game that could be played in the dark.

In the second half of the term, students prototyped robot application concepts that could positively impact people—physically, mentally, or emotionally—during our current global health crisis.

While robots cannot supplant the empathy people are able to offer each other, they might help protect us from contagion, or at the very least distract our children so we are able to work. As I also homeschool my four- and six-year-old children, it is hard not to hope that at least one of those student projects from the lab course will keep my kids practicing their ABCs or help use up some of their abundant energy while I deliver lectures.

Heather Knight is assistant professor of computer science at Oregon State University in Corvallis. Her research interests include human-robot interaction, non-verbal machine communications, and non-anthropomorphic social robots. She also heads up a robot theater company, Marilyn Monrobot, which produces an annual Robot Film Festival.

REFERENCES

Agnihotri, Abhijeet, and Heather Knight. "Persuasive ChairBots: A (Mostly) Robot-Recruited Experiment." 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). IEEE, 2019.

Beer, Jenay M., and Leila Takayama. "Mobile remote presence systems for older adults: acceptance, benefits, and concerns." Proceedings of the 6th international conference on Human-robot interaction. 2011.

Bethel, Cindy L., and Robin R. Murphy. "Non-facial/non-verbal methods of affective expression as applied to robot-assisted victim assessment." 2nd ACM SIGCHI/SIGART Conference on Human-Robot Interaction (HRI 2007). Washington, DC, March 2007.

Hedaoo, Samarendra, Akim Williams, Chinmay Wadgaonkar, and Heather Knight. "A robot barista comments on its clients: social attitudes toward robot data use." 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, 2019.

Kerse, Ngaire, et al. "Physician-patient relationship and medication compliance: a primary care investigation." The Annals of Family Medicine 2.5 (2004): 455-461.

Kidd, Cory D., and Cynthia Breazeal. "Robots at home: Understanding long-term human-robot interaction." 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2008.

Kraft, Kory. "Robots against infectious diseases." 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, 2016.

Lee, Min Kyung, and Leila Takayama. "'Now, I have a body': Uses and social norms for mobile remote presence in the workplace." Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2011.

Mutlu, Bilge, and Jodi Forlizzi. "Robots in organizations: the role of workflow, social, and environmental factors in human-robot interaction." 2008 3rd ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, 2008.

Reben, Alexander, and Joseph Paradiso. "A mobile interactive robot for gathering structured social video." Proceedings of the 19th ACM international conference on Multimedia. 2011.

Tapus, Adriana, Cristian Ţăpuş, and Maja J. Matarić. "User—robot personality matching and assistive robot behavior adaptation for post-stroke rehabilitation therapy." Intelligent Service Robotics 1.2 (2008): 169.

Yang, Lillian, Carman Neustaedter, and Thecla Schiphorst. "Communicating through a telepresence robot: A study of long distance relationships." Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems. 2017.